Data is the New Oil. Let’s Be Thoughtful Laying the Groundwork for the Next Century

Data will underpin human progress just as fossil fuels did before. Given the massive upcoming datacenter buildout, we should be thinking about sustainability today.

There are two seemingly separate super-cycles driving much investment today: AI and sustainability. A while back I wrote about the importance of thinking of these together. I worried that the excitement about AI would distract investors – especially the venture community – from tackling the challenge and opportunity of climate change. However, one of the intersections of these two trends is an incredibly attractive investment opportunity AND one of the biggest levers to build a sustainable future. Datacenters are growing at a rapid pace, and AI is increasing performance demands within them. This current acceleration of buildout and transformation of the massive data industry is the ideal time to consider sustainable expansion approaches. Data could become one of the largest single sources of global energy demand, thus it seems worthwhile to be thoughtful now to minimize the unintended consequences of our development path.

A quick look back…

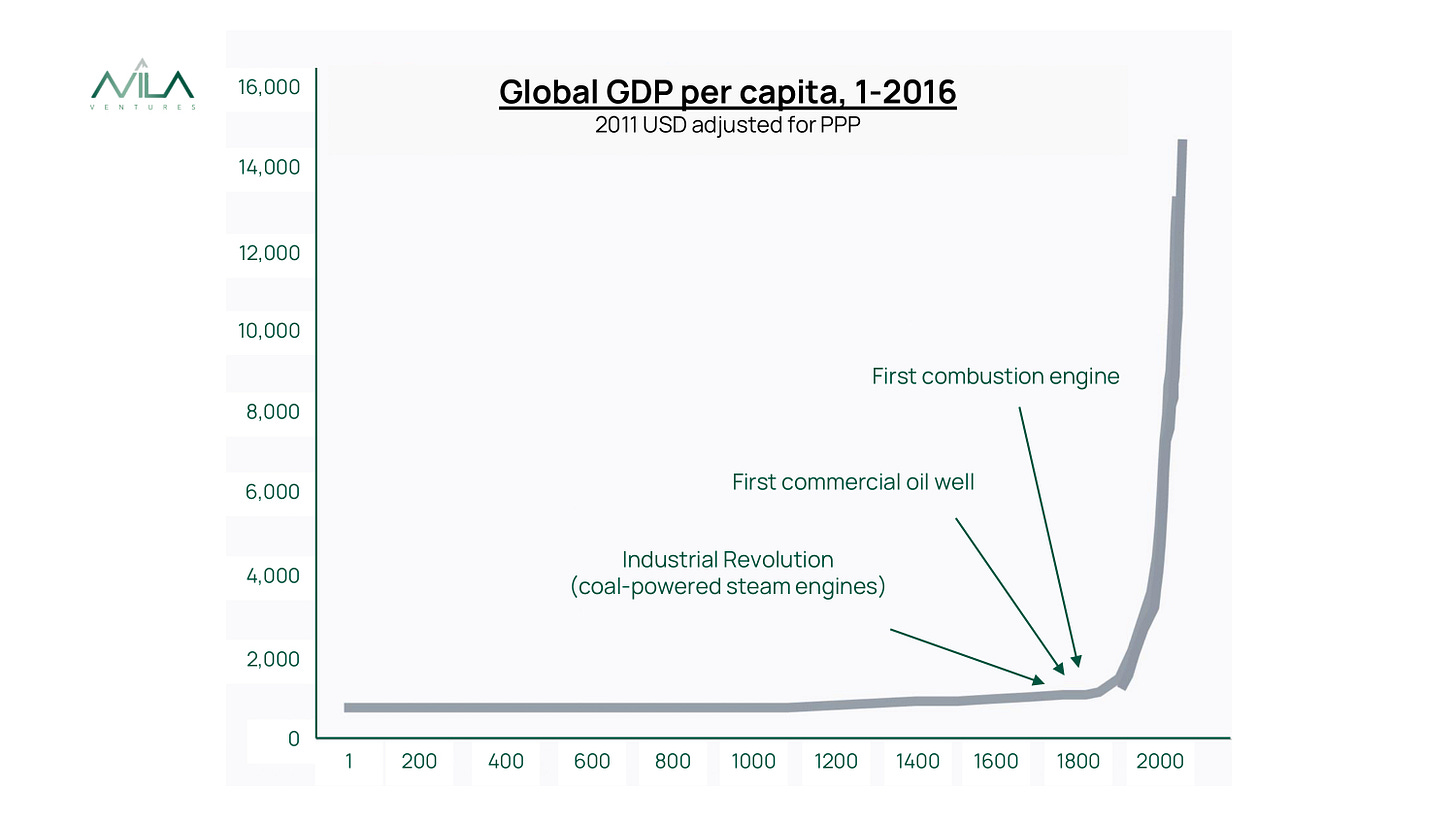

Without fossil fuels, humanity’s extraordinary rise in standard of living over the past two centuries would not have been possible. After thousands of years of minimal progress (see Figure 1), the discovery of cheap and abundant energy changed everything, kicking off the Industrial Revolution and powering a 15x increase in GDP per capita to the present day, compared to a 1.4x increase in the 1,800 years prior.

But had we known there was a big environmental cost much further down the road, perhaps we could have scaled up greener energy sources to achieve the same benefits without the cost. One could argue that fossil fuels are not inherently better than solar, wind, nuclear, and other green alternatives, but rather have benefited from over a century of scale and efficiencies in production and distribution. As a result, today the energy transition is costly, requiring “green premiums” to take technologies way behind on the scale and experience curve to the cost level of coal, gas, and oil.

Now let’s turn to data. Energy will continue to be fundamental for economic development, but if we hope for human prosperity to advance at the rate of the past two centuries, it will have to come from the power of data. Information technology already transformed our way of life in the past century, and going forward it seems unavoidable and self-evident that it will continue to take over more of our lives and economy, perhaps at an even faster pace.

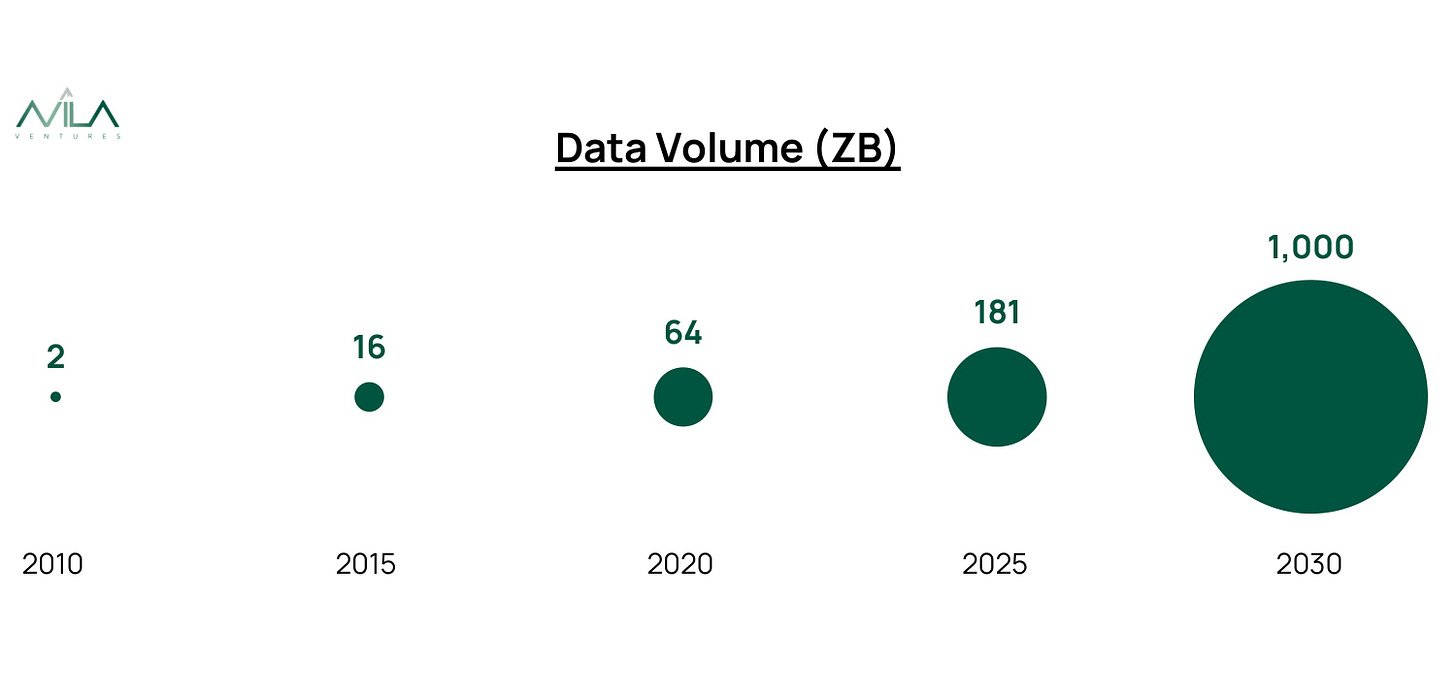

It is hard to imagine our daily routines and our thriving economy with an Internet outage. Humanity’s production and usage of data is growing exponentially (see Figure 2). And as we look ahead, the increasing value of data, the immersive entertainment and social applications, and the advent of AI portend an even greater share of our daily lives and our economic output dependent on data.

If data is the new oil - meaning as deeply entrenched in our way of life and as fundamental to our economic progress - shouldn’t we try this time to build the infrastructure sustainably given what we already know? It would be a completely unforced error to deploy billions in hard assets sub-optimally, thus creating more environmental impact than needed and signing up for a green premium down the road.

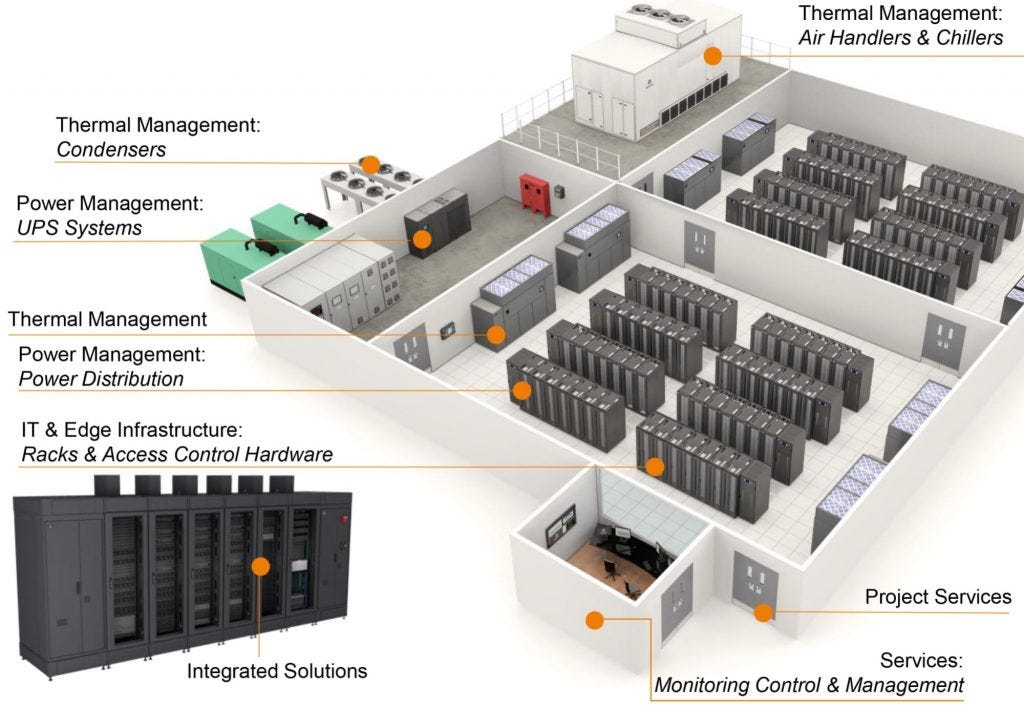

What is this data infrastructure? Datacenters (“DCs”). Datacenters are facilities that power, interconnect, and house IT hardware and data (see Figure 3).

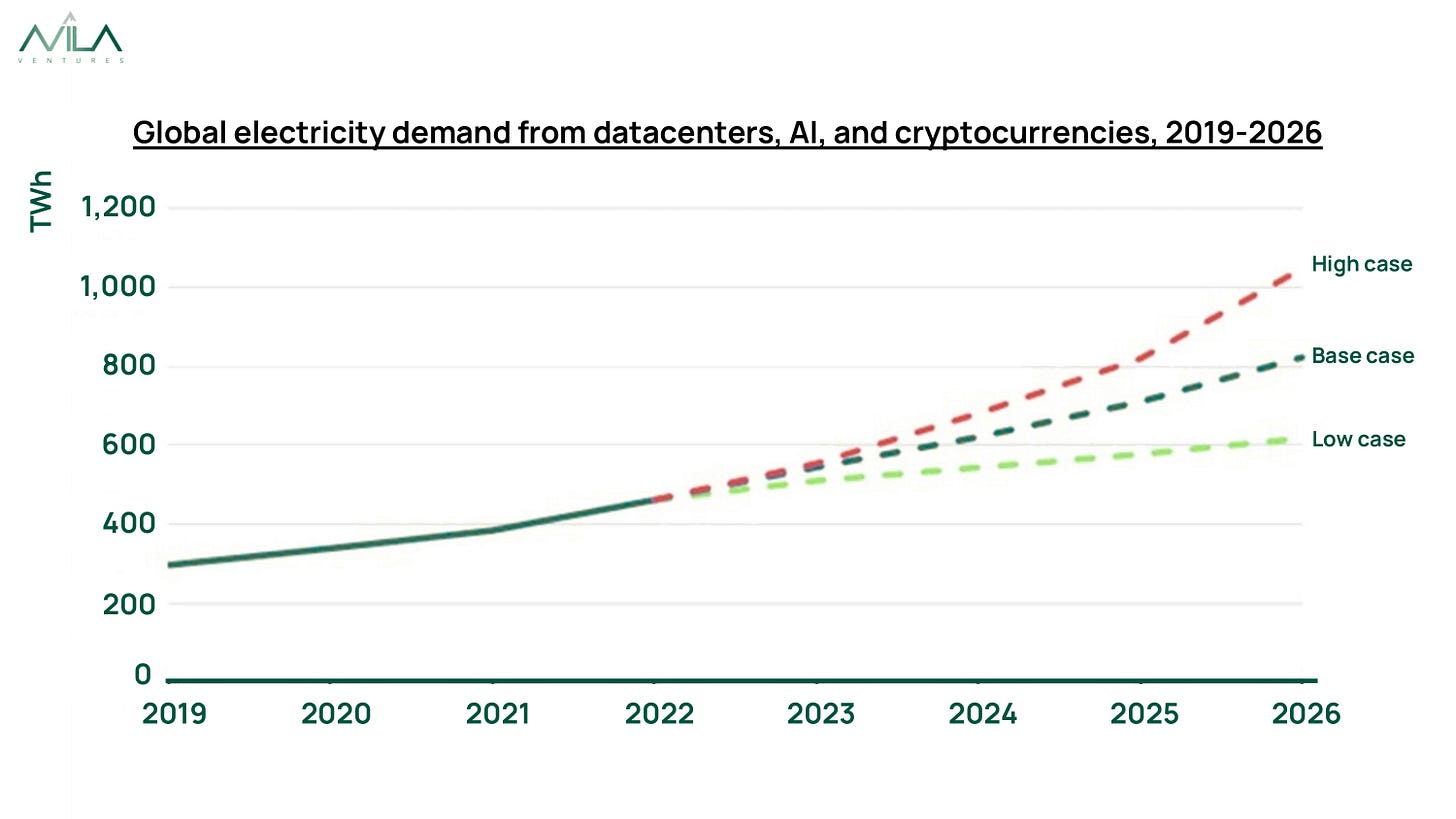

Today they consume about 2% of global electricity (see Figure 4), and experts forecast as high as 13% in the next decade. While historically efficiency gains have resulted in much lower than forecast energy demand increases, it is hard to assume continued major efficiency gains as they have stalled in recent years.

Some alarmists are calling for controls on data usage. At Avila, we believe this is both pointless and counterproductive. We will leave for a separate discussion the human and social implications of increasingly immersing ourselves in a digital world and allowing machine black boxes to drive our actions (which should foster a critical discussion of appropriate guardrails). From an environmental standpoint, we acknowledge that efforts to curb data growth would likely be futile. Perhaps more importantly, we believe data growth has positive second-order effects for the environment: (a) the increased energy use will in many cases displace even bigger energy uses (e.g., a digital banking transaction displaces a drive to, and the use of, a physical location with a bigger carbon footprint; a digital encounter displaces a physical-world encounter), and (b) the surplus of economic growth enhances our ability to finance green premiums elsewhere.

So if we accept many more datacenters will be needed, how should they be built?

For this, we need a brief sidebar on datacenters.

Datacenters 101

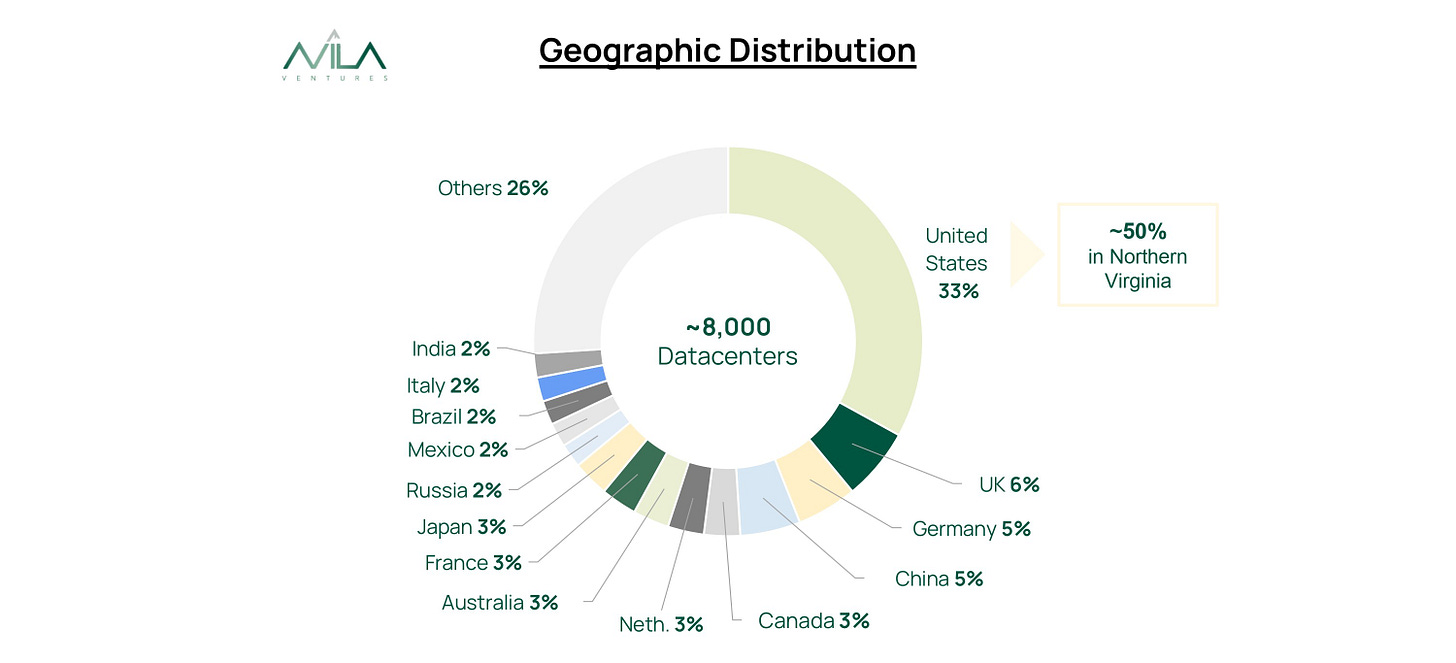

Datacenters are a $360B business, growing at ~15% annually. It is a global business, currently heavily weighted to the US, and concentrated in specific locations (see Figure 5).
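To give a feel for what ~15% annual growth implies, here is a minimal sketch that compounds the $360B figure forward. It assumes the growth rate stays constant, which the article does not claim; the function names are hypothetical and the output is an illustration, not a forecast.

```python
import math


def project_market_size(current_size_bn: float, annual_growth: float, years: int) -> float:
    """Compound a market size (in $B) forward at a constant annual growth rate."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return current_size_bn * (1 + annual_growth) ** years


def doubling_time_years(annual_growth: float) -> float:
    """Years needed for the market to double at a constant annual growth rate."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(2) / math.log(1 + annual_growth)


if __name__ == "__main__":
    # Assumption: the article's $360B and ~15% figures hold unchanged.
    for horizon in (1, 5, 10):
        size = project_market_size(360.0, 0.15, horizon)
        print(f"Year {horizon}: ~${size:,.0f}B")
    print(f"Doubling time: ~{doubling_time_years(0.15):.1f} years")
```

Under these assumptions the market would roughly double every five years, which is why decisions made in the current buildout carry so much weight.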

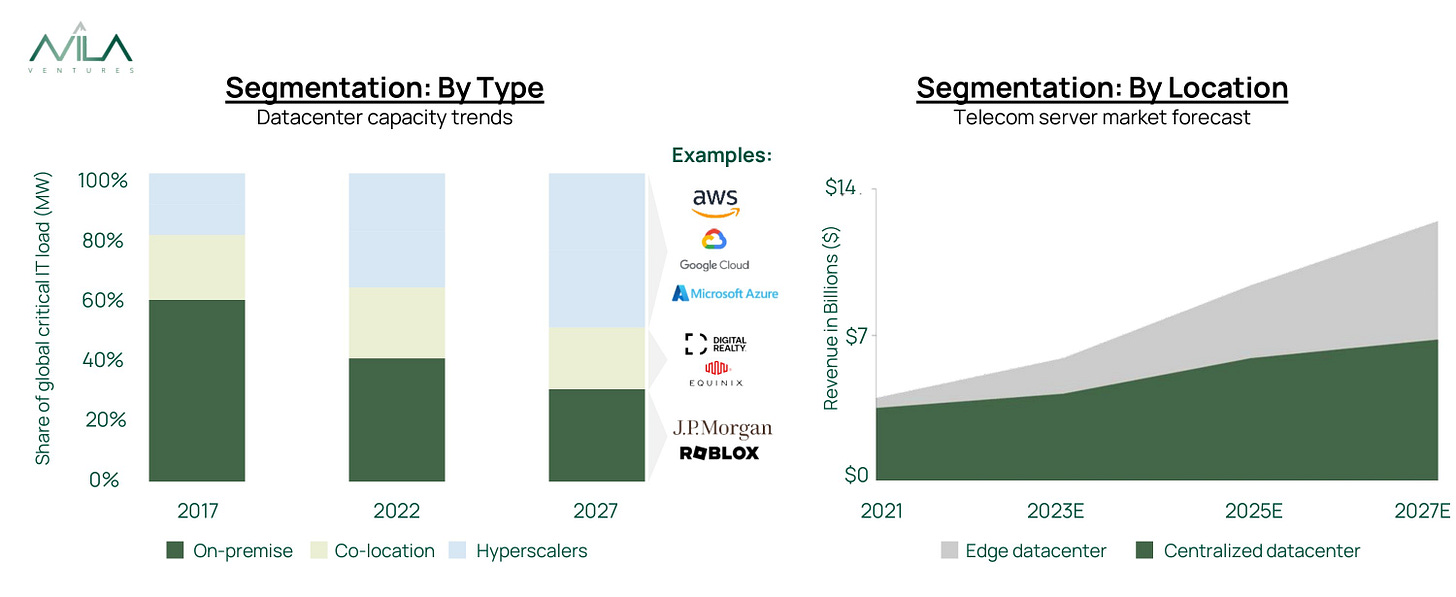

It can be segmented in multiple ways, most importantly for our purposes as follows: (1) By Type: hyperscalers (very large, hyper-efficient cloud-service providers such as Amazon’s AWS, Microsoft’s Azure and Google’s Cloud Platform), on-premise (owned or self-managed datacenters), and co-locators (real estate players that service multiple tenants leasing their own datacenter solutions), and (2) By Location: centralized (remote facilities, typically large and efficient), or at the edge (near the end users, typically small or even modular datacenters) (See Figure 6).

Perhaps the biggest story of the last decade in the DC space has been the rise of the hyperscalers, driven by the shift to the cloud and the efficiency gains arising from their streamlined operations. Because hyperscale facilities are large and centralized, and some operations need to be sited closer to end users, edge computing is also expected to grow. However, edge solutions need to adapt to localized environments, space constraints, regulatory variances, etc. This means a completely different approach.

It’s worth noting here that the above segmentations of the datacenter space are overly simplistic because customers often use different data solutions across their businesses, and companies may play in more than one segment at a time. Even the more standardized and vertically integrated hyperscalers often mix and match solutions and service providers across the value chain. For on-premise solutions, co-locators, and the burgeoning edge computing space, this is infinitely more complex as multiple configurations of business and services exist.

In summary: the rise of the hyperscalers, and the upcoming rise in edge computing and AI applications are key determinants of sustainability going forward.

What Is Sustainable? What Gets Measured Gets Improved

Measuring resource usage is where efficiency begins.

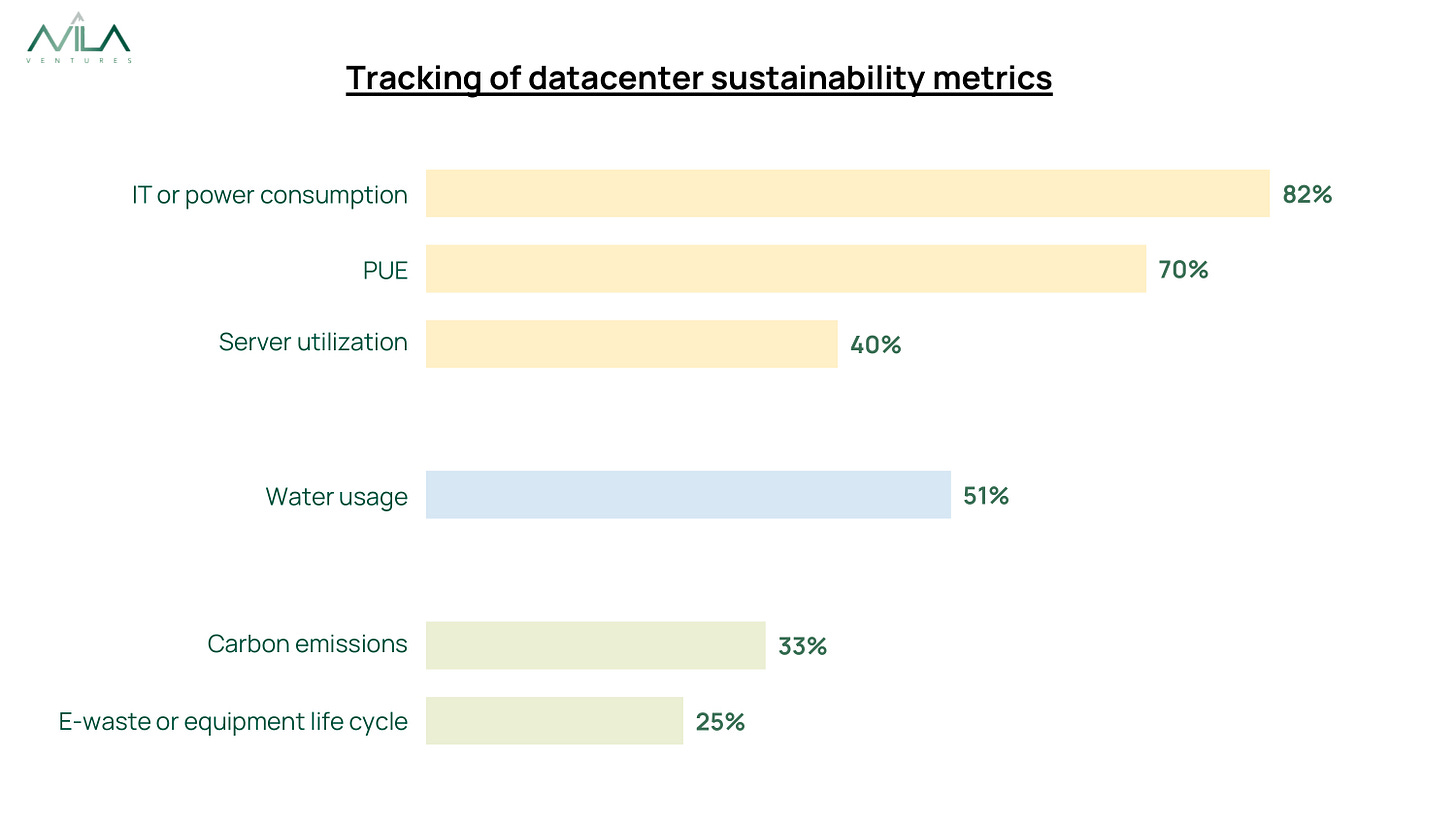

The most important lever in energy efficiency is the efficiency of the data processing equipment. Some of it is driven by server and chip design, which is the work of the large chip designers whose focus is on performance and efficiency, each with varying degrees of importance depending on the application. At the datacenter level, managing Server Utilization is the biggest opportunity, and yet surprisingly few operators track it, let alone invest in improving it.

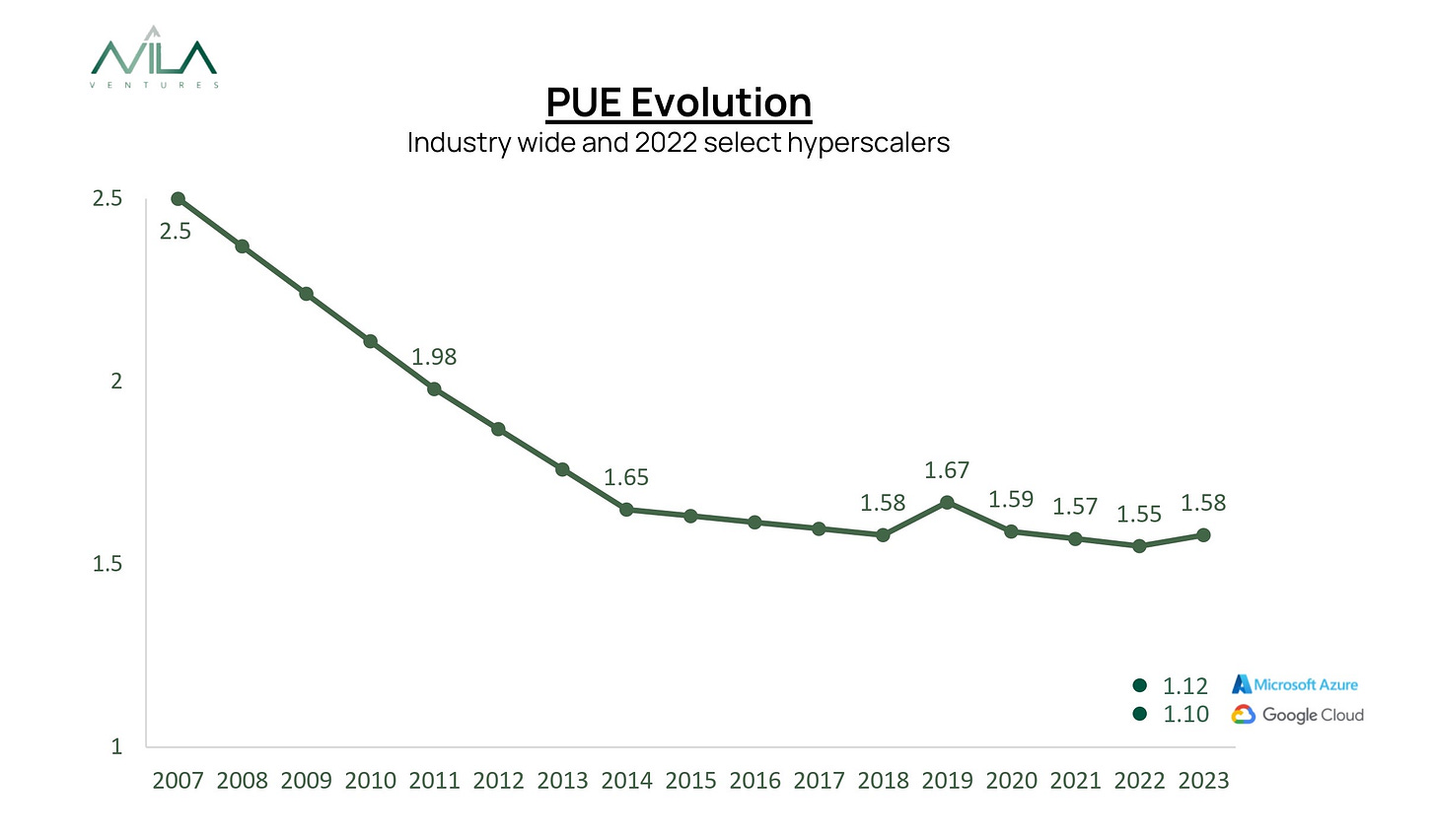

PUE (Power Usage Effectiveness) is the standard sustainability metric, calculated as the amount of power needed to operate and cool the datacenter divided by the amount of power drawn by the IT equipment in the datacenter (ideal PUE level = 1). While PUE is not perfect, as it doesn’t capture the efficiency of the IT equipment noted above, it remains a useful proxy and an important tool for optimizing all other energy uses in datacenter facilities.
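The PUE ratio described above can be sketched in a few lines. This is a minimal illustration, assuming you have energy readings for the whole facility and for the IT equipment over the same period; the function names and sample readings are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT equipment energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that goes to cooling and other non-IT uses."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return 1 - 1 / pue_value


if __name__ == "__main__":
    # Hypothetical meter readings for one month, in kWh.
    value = pue(total_facility_kwh=1_580_000, it_equipment_kwh=1_000_000)
    print(f"PUE = {value:.2f}, overhead share = {overhead_share(value):.0%}")
```

A PUE of exactly 1 would mean every unit of energy reaches the IT equipment, which is why it is the ideal level.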

PUE levels in datacenters declined rapidly in the previous decade driven mainly by the rise of the hyperscalers and improvements in air cooling technologies, but have remained flat for the last five years at an average annual PUE of 1.58, mainly due to the increasing heat generated by new, power-hungry servers pushing air-cooling capabilities to their limits. Luckily, some hyperscalers are already reporting PUEs around 1.1, in line with their aggressive net-zero targets (See Figure 7). But this might not last with the rise of AI without changes in datacenter configurations.
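To put the gap between the 1.58 average and the ~1.1 reported by some hyperscalers in perspective, the sketch below estimates how much total facility energy would be saved if a site moved from one PUE to the other. It assumes the IT load itself stays unchanged, which is a simplification; the function name is hypothetical.

```python
def facility_energy_saving(pue_before: float, pue_after: float) -> float:
    """Fractional cut in total facility energy when PUE improves and IT load is unchanged."""
    if pue_before < 1 or pue_after < 1:
        raise ValueError("PUE cannot be below 1")
    # Total energy = IT energy * PUE, so the IT energy term cancels out of the ratio.
    return 1 - pue_after / pue_before


if __name__ == "__main__":
    saving = facility_energy_saving(pue_before=1.58, pue_after=1.1)
    print(f"Total facility energy reduction: {saving:.1%}")
```

Under that assumption, closing the gap would cut total facility energy by roughly 30% for the same computing work.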

Water Usage Effectiveness (WUE) is the measure of the amount of water used to cool IT assets. Reducing water consumption has only recently become an important consideration for sustainable datacenters. The rise of the hyperscalers and drought concerns in various geographies with large datacenters have belatedly given rise to awareness of the significant water consumption of the traditional air cooling systems used in the facilities. Going forward, water is unlikely to remain “free”, and thus conservation will likely increase in importance.
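The article does not spell out the WUE formula. A commonly used formulation, assumed here, expresses it as liters of water consumed per kWh of IT equipment energy over the same period. The sketch below follows that assumption; the function name and sample figures are hypothetical.

```python
def wue(annual_water_liters: float, annual_it_energy_kwh: float) -> float:
    """Water Usage Effectiveness in liters per kWh of IT energy (assumed formulation)."""
    if annual_water_liters < 0:
        raise ValueError("water usage cannot be negative")
    if annual_it_energy_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return annual_water_liters / annual_it_energy_kwh


if __name__ == "__main__":
    # Hypothetical site: 18 million liters of water, 10 GWh of IT energy per year.
    print(f"WUE = {wue(18_000_000, 10_000_000):.2f} L/kWh")
```

Lower is better, and a facility that uses no water for cooling would score 0.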

As a first step, encouraging and/or demanding tracking of statistics (see Figure 8) should spur efficiency gains.

Will simply tracking metrics be sufficient to push towards more sustainable solutions? We certainly do not believe so. The benefits of metrics improvement must translate in some way to the bottom line to spur change. But given how far hyperscalers’ efficiency gains have translated into lower costs and thus increased share, the rest of the market seems poised to improve simply by tracking and attempting to close the gap. Furthermore, metrics understood by the market can also spur positive change - see for example

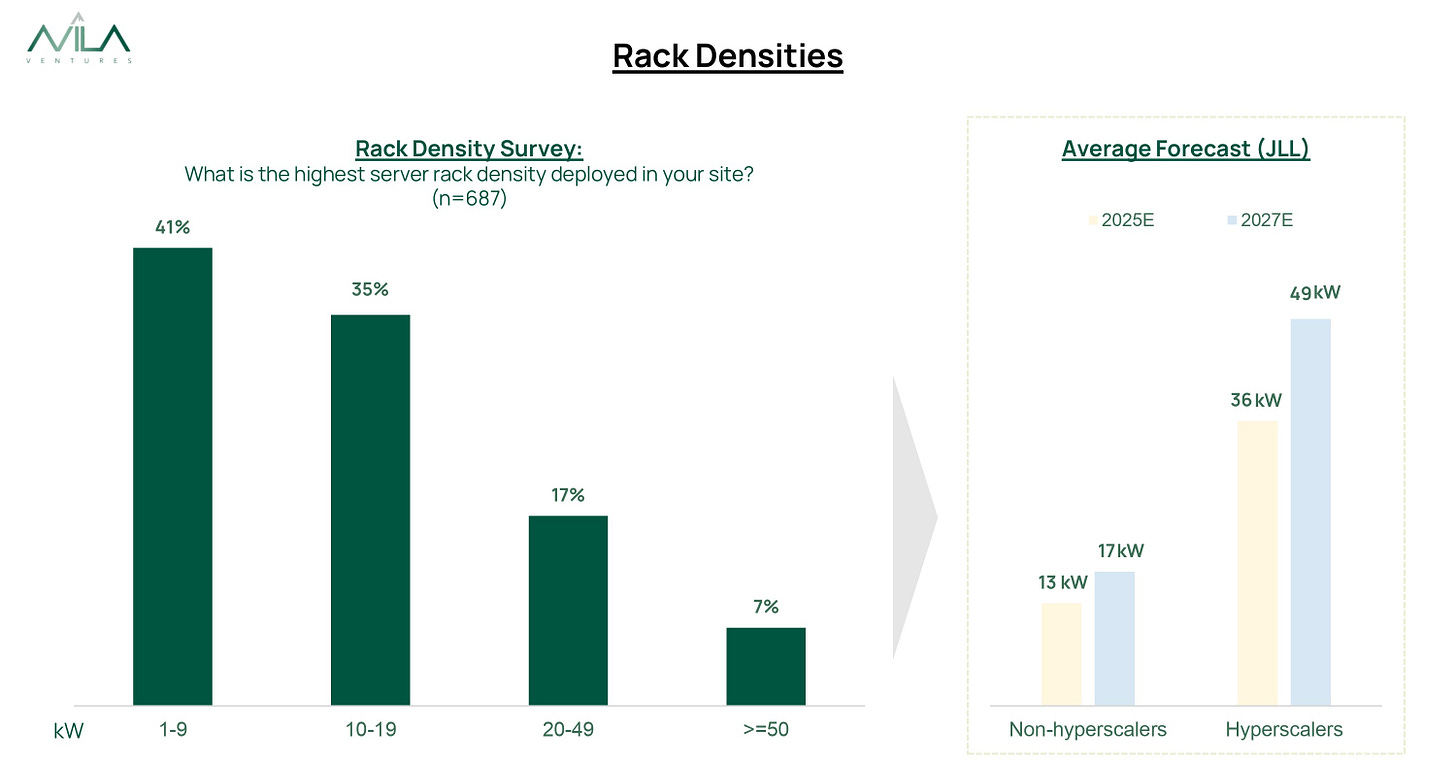

’s Click Clean Scorecard.

Beyond metrics tracking, strong market forces are aligned with sustainability goals now. Why? The increasing power density (measured in kW per rack) for applications such as AI is demanding new forms of cooling. Although the vast majority of rack densities are well below 20 kW and will likely remain so for some time, an increasing proportion of racks will exceed 50 kW, especially at the hyperscalers (see Figure 9). In addition, rising energy prices and market and regulatory concerns around sustainability are also creating tailwinds for more sustainable approaches.

Datacenter Cooling

Industrywide, more than half of DC energy demand is for ancillary systems besides the actual data processing. Given that for hyperscalers the data processing represents 80% or more, the remaining DCs likely have meaningful opportunities for improvement (see Figure 10).

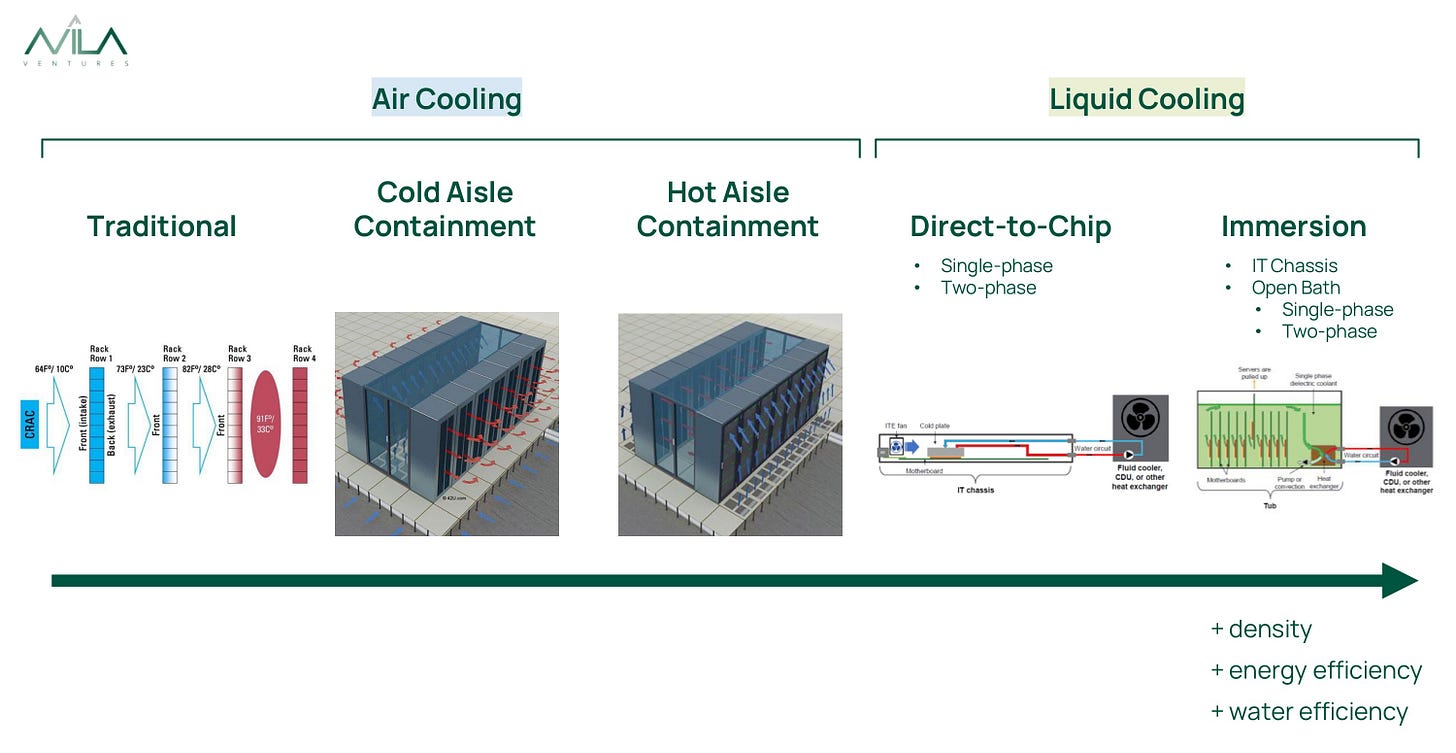

Datacenter equipment must be cooled to work efficiently. Air cooling has been the traditional option and remains by far the most common. However, liquid cooling is more efficient, as liquids have a much greater capacity to capture heat per unit volume. Liquid cooling can handle higher rack densities (air is only effective up to ~20 kW per rack), is more energy and water efficient, reduces sound levels, and improves dust and dirt exclusion. Liquid cooling hasn’t seen broader adoption yet mainly because of lack of standardization, retrofitting expenses (liquid cooling requires a quite distinct setup and mechanical and plumbing specs), equipment life cycles (4-5 years) and long-term building leases (~10 years, often with the cost of energy borne by the owner)… and most datacenter infrastructure was built before liquid cooling was an option.
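As a rough illustration of the density threshold, the sketch below flags which racks fall beyond the ~20 kW range where air cooling stops being effective. The threshold comes from the article and is approximate; the function names and sample rack loads are hypothetical, and real cooling decisions depend on many more factors (facility layout, climate, lease terms).

```python
from typing import Iterable

AIR_COOLING_LIMIT_KW = 20.0  # approximate ceiling for air cooling quoted in the article


def suggest_cooling(rack_kw: float, air_limit_kw: float = AIR_COOLING_LIMIT_KW) -> str:
    """Return 'air' for racks within the air-cooling limit, otherwise 'liquid'."""
    if rack_kw < 0:
        raise ValueError("rack power cannot be negative")
    return "air" if rack_kw <= air_limit_kw else "liquid"


def liquid_share(rack_loads_kw: Iterable[float]) -> float:
    """Fraction of racks whose density exceeds the air-cooling limit."""
    racks = list(rack_loads_kw)
    if not racks:
        return 0.0
    needing_liquid = sum(1 for kw in racks if suggest_cooling(kw) == "liquid")
    return needing_liquid / len(racks)


if __name__ == "__main__":
    # Hypothetical mixed hall: mostly low-density racks plus a few AI racks.
    hall = [6, 8, 8, 12, 15, 18, 55, 60]
    print(f"Racks needing liquid cooling: {liquid_share(hall):.0%}")
```

A mixed result like this is typical of the situation the article describes, where a small number of high-density racks sit alongside a majority of low-density ones.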

There have already been meaningful improvements to traditional air cooling, mainly cold and hot aisle containment, each with its pros and cons based on the existing infrastructure. However, next-gen performance (and sustainability) improvements require liquid cooling. Liquid cooling methods are segmented into: direct-to-chip (“D2C” or “cold plate”) - a liquid coolant, which is never in direct physical contact with the IT equipment, targets high-thermal-emission components (like GPUs) to remove heat, and immersion cooling - sealed IT equipment is fully or partially immersed in a dielectric (non-conductive) liquid coolant to ensure all sources of heat are removed (See Figure 11).

Direct-to-chip is easier and more cost-efficient to retrofit than immersion cooling, as it allows existing infrastructure to remain almost unchanged, adding only cold plates and tubing. Full immersion might become mainstream after several cycles, but to utilize existing infrastructure, comply with 99.99% uptime demands and co-exist side-by-side with existing datacenters (for which the vast majority of space for a long time will still be comprised of low density racks), we believe direct-to-chip is the right stepping stone within the CapEx deployment cycle.

At Avila, we believe direct-to-chip cooling technologies are the most viable path to transition quickly to a greener datacenter infrastructure within the next decade. At the highly efficient hyperscalers, the shift to direct-to-chip will happen despite the low current PUEs, driven mainly by AI demands.

So How Can We Build a Greener Data Economy?

Data Processing — improving the power and efficiency of the chips and the entire datacenter architecture as well as optimizing data management algorithms reduces environmental impact. It is imperative to highlight that this is an ongoing challenge, as each increase in processing power unleashes a new cycle of optimizing efficiency (as in the case of the newest chips for AI and other applications, which take cooling needs to a heightened level).

Cooling — on average 40% of DC energy demand is for cooling, so optimizing this is the next biggest lever. Given the huge disparity in cooling efficiency across DCs, it is clear that upgrading older infrastructure is a big lever in improving industrywide performance, as newer facilities have much lower PUEs (admittedly some of this is attributable to scale, but not the majority). In addition, transition to best-in-class liquid cooling can massively reduce energy and water consumption, and will simply be necessary as we enter a world with more and more high-performance chips (HPCs) as is the case with AI and other applications.

Location — a datacenter’s location is driven by multiple considerations: closeness to fiber and end users, power availability and costs, resiliency of location, etc. Within the matrix of factors to be considered, an important driver of sustainability is climate. A temperate climate would reduce cooling demands, lower the datacenter’s PUE, and improve its WUE. Alternatively, if a location has unlimited access to clean energy (either because solar is locally readily available with cost-effective storage, or we have cracked the nut on limitless nuclear, geothermal, or other green energy sources), external temperature becomes a much less relevant factor.

Renewable Energy — Renewable energy (ideally with on-site storage to avoid using variable sources of green energy during periods of high demand) can fully eliminate a DC’s carbon footprint. Switching backup systems (a mission-critical part of DCs, whose core metric is uptime) from the commonly used diesel generators to greener solutions is also helpful.

Efficient and Greener Construction — centralized, optimally designed and sourced facilities drive maximum sustainability (such as those of the hyperscalers). When business needs require solutions that cannot achieve efficiencies via scale such as data at the edge, pre-fabricated modular solutions can at least provide the scale and efficiency gains of replicability, and minimize impact on crowded, urban environments.

Circularity/Recycling — given the rapid obsolescence of the equipment inside datacenters, optimizing their end of life can deliver big economic and sustainability benefits.

Roles and Investment Opportunities Across the Board

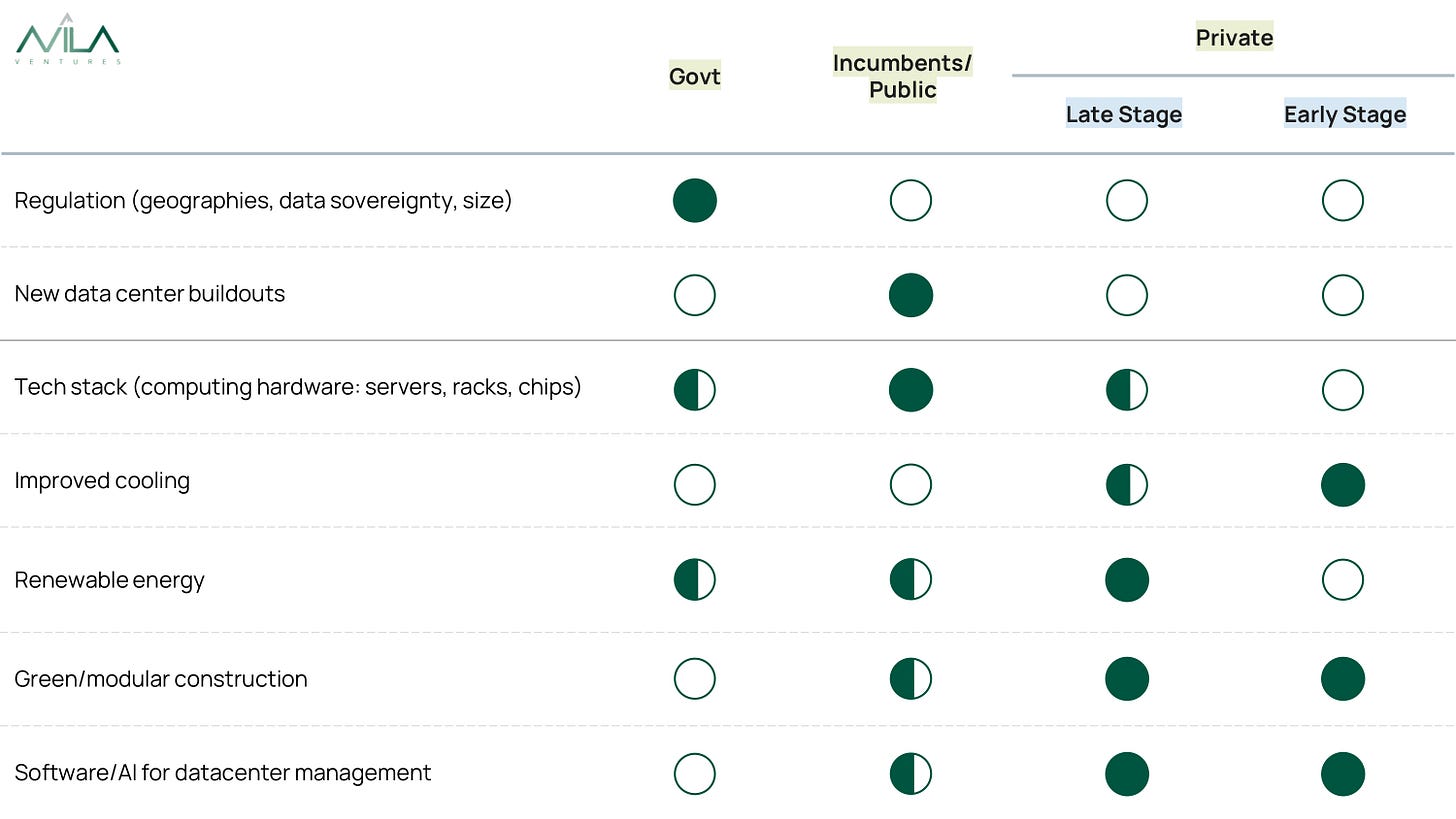

Many actors have roles to play in advancing the roadmap for the data economy. Below (See Figure 12) we attempt to summarize where each player can have maximum impact.

Governments can set the stage for optimal buildout with the right frameworks and incentives. Sustainability may not be the driving force behind these - national security, competitive advantage, and data sovereignty might be important forces for regulatory actions and incentives - but carefully considered parameters today can protect our planet and become a competitive advantage over time. Altogether, regulatory frameworks and governmental actions can have massive implications for the datacenter industry, especially as it relates to location.

Incumbents in the datacenter technical stack (compute equipment manufacturers like NVIDIA, Intel, HP, Dell, etc.) have a huge opportunity to drive core efficiency gains in data processing with new chip designs and server configurations. While it is not impossible for a new player to emerge, the barriers to entry in terms of talent, capital, and infrastructure are nearly insurmountable.

Large datacenter real estate players (often public companies or private-equity owned) are major drivers in the buildout. This exceedingly attractive commercial real estate sector - especially in the current macro against other real estate asset classes - is attracting large swaths of capital, and thus now is a unique time to make the right decisions with long-term impact, such as datacenter configuration and energy sources. In conjunction with the big service providers (such as Schneider Electric, Vertiv, etc.) they can tilt the industry in a constructive direction, as they serve as advisors in the buildout and operation of the industry, and often provide the expertise and guidance to establish the roadmaps for companies preparing for the unknown demands of AI, general computing, sustainability, etc.

End customers (especially the hyperscalers) are ultimately the ones driving the decision-making. Their commitment to sustainability (perhaps most notably Microsoft as noted in their carbon negative, water positive, and zero waste commitments by 2030) can on the margin influence some of the big CapEx and operation decisions, and drive quicker adoption of green technologies and energy sources.

Finally, the startup community’s opportunity is most compelling in the development of new proprietary technologies that advance both better, cheaper, and more powerful computing and increased sustainability.

Request for Startups

At Avila we believe that innovative direct-to-chip cooling, modular edge computing solutions, and software/AI tools to optimize datacenter operations and energy management present extraordinary opportunities for startups to build significant businesses with a positive impact in a dynamic market growing rapidly in importance. If you are working on this please reach out.

And if you have any feedback on this analysis, please reach out; we’d love to hear your thoughts.

Many thanks to the industry players across all market segments who shared their insights and views and whose contributions greatly enhanced our understanding of this space.

To discuss or learn more about Avila VC please reach out to us.

Follow us for sporadic postings on LinkedIn and Twitter/X, and Patty Wexler on Medium